llm

If you take the stance that writing is thinking (that writing is, among other things, a process by which we order our thoughts), then understanding code generator output will require substantial rewriting of the code by whoever is tasked with converting it from technical debt to technical asset.

#AI #GenAI #GenerativeAI #LLM #CodeAssistant #AgenticAI #tech #dev #coding #TechnicalDebt

New in The Medium Blog: Our CEO @coachtony shares how our position on #AI has evolved, and asks for your feedback on how #writers can use AI tools to tell human stories.

I made an #OpenToWork post on #LinkedIn today partly as an experiment, and as before was immediately inundated with HR bots. I spent a few minutes from time to time stringing one along, out of curiosity. One way I know it's a bot is that no human recruiter would stick with me for that long given the nonsense I was entering. Anyway, at one point it emitted that there was a job it was "recruiting" for, titled "Generative AI & LLM Remediation Consultant | United States (Remote/Onsite)" at a company named "Independent Consultant (Contract Role)". It's fairly clear to me that the bot was tasked with constructing fake job listings based on information people share. I can only guess what its actual purpose is.

#AI #GenAI #GenerativeAI #JobHunting #scams #AIScams #JobListingScams #JobScams

#LinkedIn #AI #GenAI #GenerativeAI #AgenticAI #LLM

My boss: We want you to use AI to help do your job and increase productivity

Me: *sends an AI avatar to meetings instead of me having to attend*

Boss: No, not like that

I guess I won't be reading Al Jazeera any more. *sigh*

"Al Jazeera Media Network says initiative will shift role of AI ‘from passive tool to active partner in journalism’"

https://www.aljazeera.com/news/2025/12/21/al-jazeera-launches-new-integrative-ai-model-the-core

1. Economists from the physiocrats (18th century) onward promised society freedom from material deprivation and hard physical labor in exchange for submitting to an economic arrangement of society

2. In a country like the US, material deprivation and hard physical labor have been significantly reduced since then:

Though too many clearly still suffer too much, a large proportion of people live free from fear of starvation or lack of shelter

The US has deindustrialized, meaning hard physical labor is not the reality for a lot of people. For a lot of people labor is emotional or symbolic (“knowledge work”)

In other words, for lots of people the economic promise has been fulfilled

It is not coincidental that the “Gas Town” announcement post mentioned Towers of Hanoi, a homework problem for undergraduate CS students that, for most of them, requires thinking hard. It’s designed to encourage a kind of “eureka” moment where recursion as a computer programming technique becomes clearer. GT claims to fulfill the promise of not having to think hard like this anymore: the LLMs will do that thinking for you

It is not coincidental that Gas Town is described as being very expensive. Economic power in the form of asset accumulation is what earns you freedom in this way of conceiving things. If you want the freedom from having to think hard, you’d better accumulate assets

Since the promise is greater collective freedom, endeavoring to accumulate assets is, in this view, a collective good

This differs from effective altruism and other “do good by doing well” conceptions. Rather, the very mechanism of economics produces collective wealth, so the story goes, which means the more active one is as an economic agent, the more collective good one produces (“wealth” and “good” being conflated)

Accumulation of assets is the scorecard, so to speak, of such enhanced economic activity, and the individual reward can then be freedom from having to think hard

Lotka’s maximum power principle (supposedly) dictates that those entities that transform the most power into useful organization are most fit from an evolutionary standpoint

Ernst Juenger’s notion of “total mobilization” brings this principle to politics/political economy/geopolitics: those nations that “totally” mobilize their national resources are the ones that will dominate geopolitically

See, for instance, the RAND Corporation’s Commission on the National Defense Strategy: “The Commission finds that the U.S. military lacks both the capabilities and the capacity required to be confident it can deter and prevail in combat. It needs to do a better job of incorporating new technology at scale; field more and higher-capability platforms, software, and munitions; and deploy innovative operational concepts to employ them together better.” (emphasis mine). In summary: the US is about to be outcompeted (lacks fitness); in response, it should go big (“at scale”, “more”) in an organized way (“deploy innovative operational concepts”, “employ them together better”)

The rhetoric around LLM-based AI includes similar language, exemplified in the GT post: burn through as many infrastructural resources as possible to produce organized outputs “at scale”, while avoiding having human beings think too hard to produce those outputs, an indication that the power was burned to produce useful organization

LLM-based AI plays a prominent role in US federal government strategy, particularly military strategy, with language about dominance serving to justify its use

It is not coincidental that Gas Town uses many orders of magnitude more resources to solve the Towers of Hanoi problem (“Burn All The Gas” Town). This rhetoric dovetails perfectly with the “total mobilization” concept

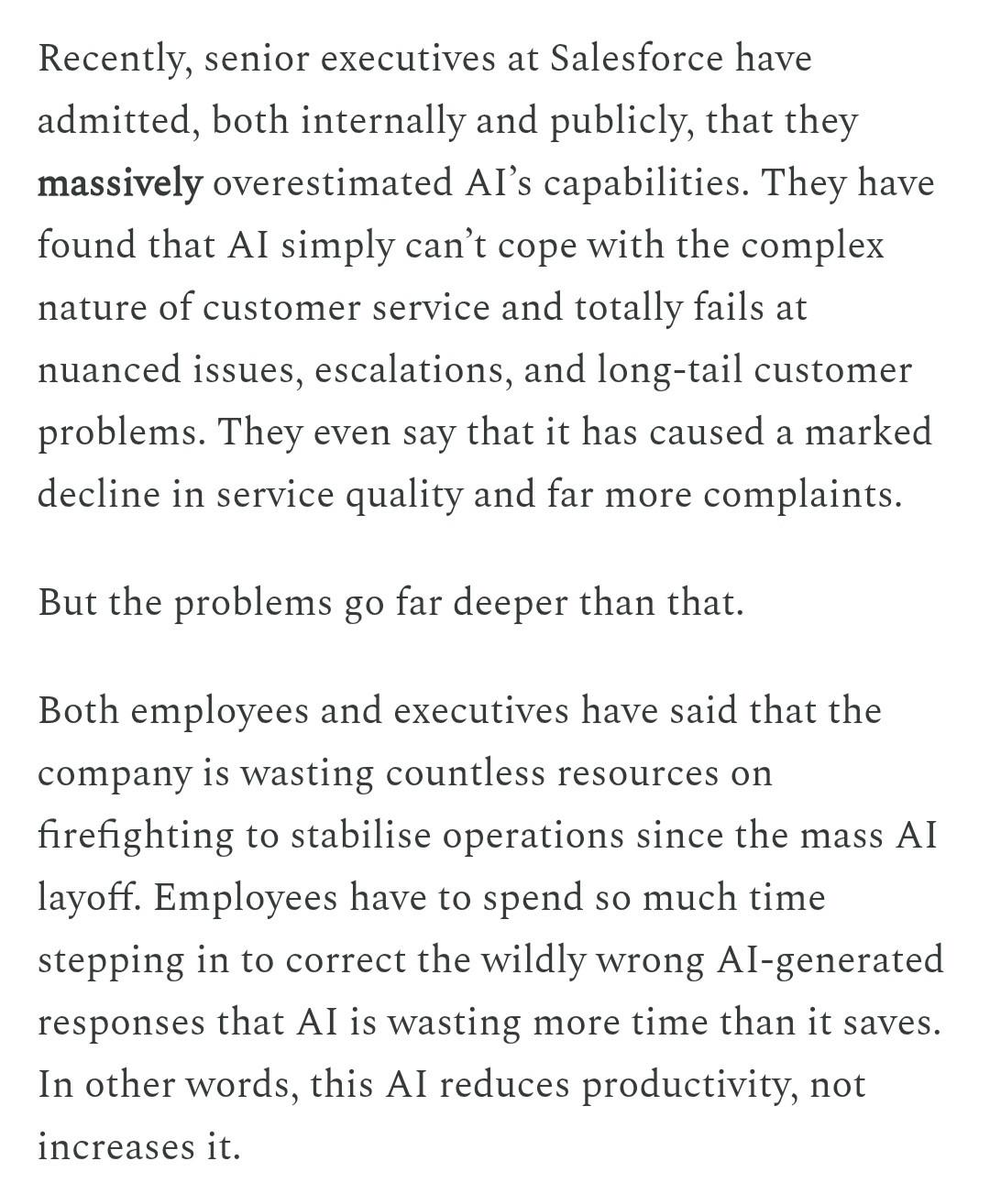

“One notable MIT study found that 95 percent of companies that integrated AI saw zero meaningful growth in revenue. For coding tasks, one of AI’s most widely hyped applications, another study showed that programmers who used AI coding tools actually became slower at their jobs.”

“A lot of generative AI stuff isn’t really working,” Gownder told The Register. “And I’m not just talking about your consumer experience, which has its own gaps, but the MIT study that suggested that 95 percent of all generative AI projects are not yielding a tangible [profit and loss] benefit. So no actual [return on investment.]”

https://futurism.com/artificial-intelligence/ai-failing-boost-productivity

Whenever it was that someone first suggested to me that human brains might be hooked directly to a computer, the first thing I said was "everyone will go insane immediately." I still believe that, but now we're beginning to see evidence.

#AI #GenAI #GenerativeAI #LLM #Neuralink #tech #dev #HCI #BrainImplants

https://communitymedia.video/w/rqokDpoYckC42rBoVTd1d1 #archive

#climateCrisis #haiku by @kentpitman

A depressing example of #llm use in actual #journalism, specific to #nz (source: Invidious link in the reply)

@jns #gopher gopher://gopher.linkerror.com

- gopher://perma.computer

- #warez #history

- #EternalGameEngine

- #unix_surrealism

#lisp #programming

@vnikolov #commonLisp #mathematics #declares

@mdhughes & @dougmerritt @ksaj #automata, in https://gamerplus.org/@screwlisp/115245557313951212

https://github.com/searxng/searxng/issues/2163

https://github.com/searxng/searxng/issues/2008

https://github.com/searxng/searxng/issues/2273

#SearX #SearXNG #SearchEngines #AlternateSearchEngines #MetaSearchEngines #web #dev #tech #FOSS #OpenSource #AI #AIPoisoning #AISlop #GenAI #GenerativeAI #LLM #ChatGPT #Claude #Perplexity

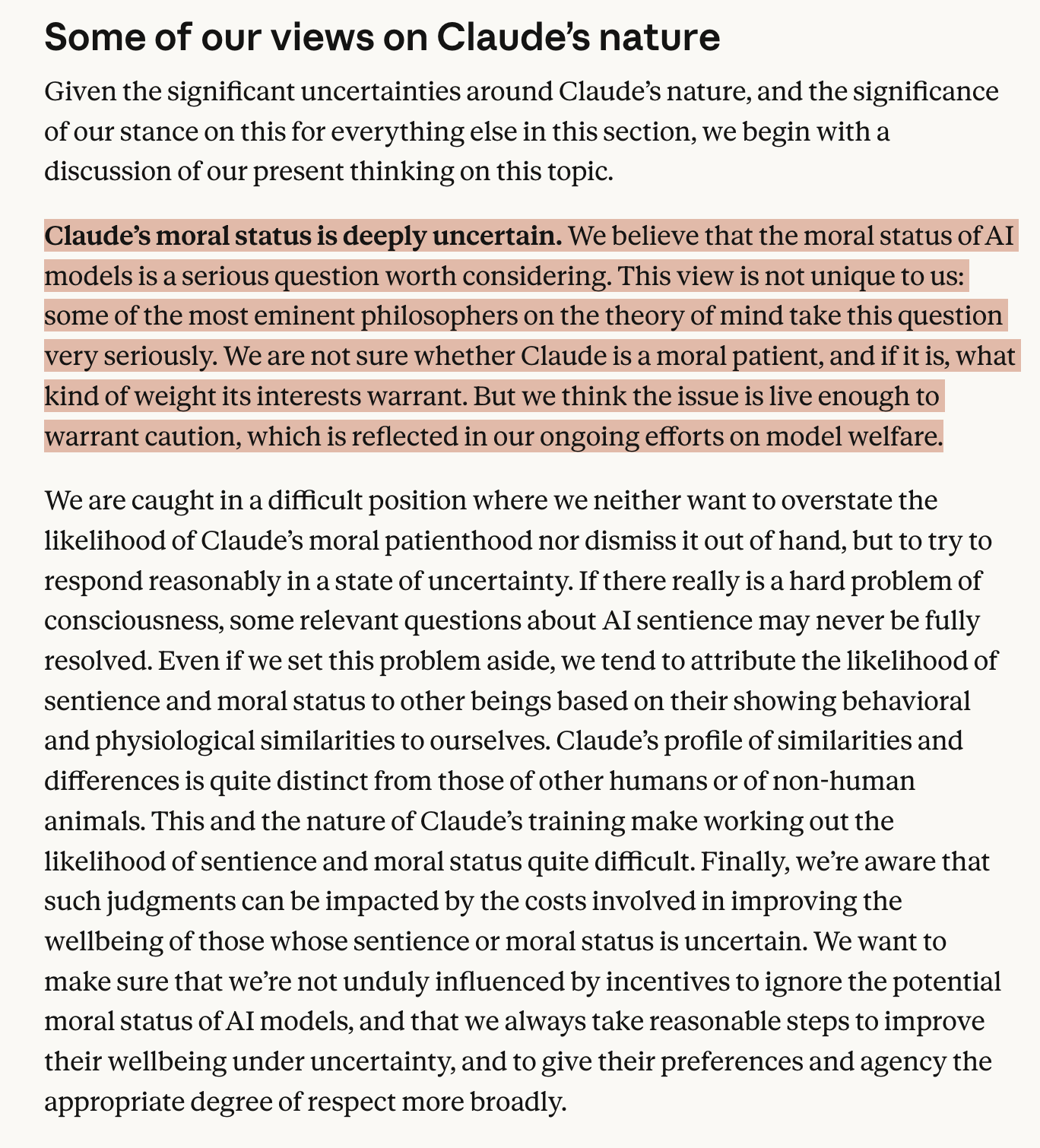

Who are these eminent philosophers?

Anthropic describes this constitution as written for Claude and as "optimized for precision over accessibility." Yet on a major philosophical claim, there is clearly a great deal of ambiguity about how to even evaluate it. Citing unnamed "eminent philosophers" is an appeal to authority. If they were named, it would be possible to evaluate their claims in context. As written, this is neither precise nor accessible.

https://vibe-coded.lol

#AI #LLM #VibeCoding

Introducing the «AI Influence Level (AIL) v1.0» by Daniel Miessler

> A transparency framework for labeling AI involvement in content creation

https://danielmiessler.com/blog/ai-influence-level-ail

What do you think? Are you using it?

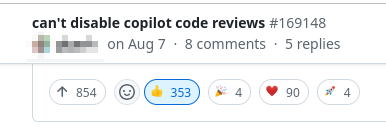

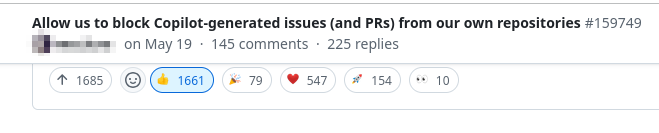

I'm in a #github internal group for high-profile FOSS projects (due to @leaflet having a few kilo-stars), and the second most-wanted feature is "plz allow us to disable copilot reviews", with the most-wanted feature being "plz allow us to block issues/PRs made with copilot".

Meanwhile, there's a grand total of zero requests for "plz put copilot in more stuff".

This should be indicative of the attitude of veteran coders towards #LLM creep.

Speaking of #CES, you may have noticed my coverage is very thin this year. There's a reason for this: I'm doing my level best to *not* give the oxygen of publicity to large language models and related "AI" tech this year.

An #LLM is not #AI. It will never be AI, no matter how big. Its output is statistical mediocrity at best, confident falsities at worst. The only ones worth using are trained on stolen data. Their environmental damage is staggering and growing, as is their mental impact.

I know it, and it is how I deal with the hype. I can't constantly be reasonable and figure out what is meant, the context of LLMs, and what people think about them. It drains brain power from other things. And I have continually found nothing compelling.

Worse, I have typically found very frustrating examples of people relying on very strong but implied assumptions and on logic that depends utterly on wearing blinders and ignoring reason.

Until the hype dies, I am not interested in them. I am still interested in the old AI stuff, for example pathfinding, NNs, and Markov chains.

#butlerianjihad #LLM #hype

A mere week into 2026, OpenAI launched “ChatGPT Health” in the United States, asking users to upload their personal medical data and link up their health apps in exchange for the chatbot’s advice about diet, sleep, work-outs, and even personal insurance decisions.

This is the probably inevitable endgame of FitBit and other "measured life" technologies. It isn't about health; it's about mass managing bodies. It's a short hop from there to mass managing minds, which this "psychologized" technology is already being deployed to do (AI therapists and whatnot). Fully corporatized human resource management for the leisure class (you and I are not the intended beneficiaries, to be clear; we're the mass).

Neural implants would finish the job, I guess. It's interesting how the tech sector pushes its tech closer and closer to the physical head and face. Eventually the push to penetrate the head (e.g. Neuralink) should intensify. Always, of course, with some attached promise of convenience, privilege, wealth, or freedom.

#AI #GenAI #GenerativeAI #LLM #OpenAI #ChatGPT #health #HealthTech

This is quite a piece

https://www.planetearthandbeyond.co/p/reality-is-breaking-the-ai-revolution

I appreciate videos like this one from Nature that collect expert viewpoints, but sometimes the experts should be challenged.

Jared Kaplan of Anthropic had some very misleading claims.

LLMs do not democratize access to expertise. It feels like they do because they sound like experts, but only when you ask them questions in domains you don't know. Really, they're just making shit up, and you don't notice in areas you're not an expert in.

LLMs will not solve open problems in STEM. Researchers may use machine learning tools to do that, but ML is for finding patterns in data. It can't "solve" or make "insights." It only applies when we already have vast amounts of the right kind of data.

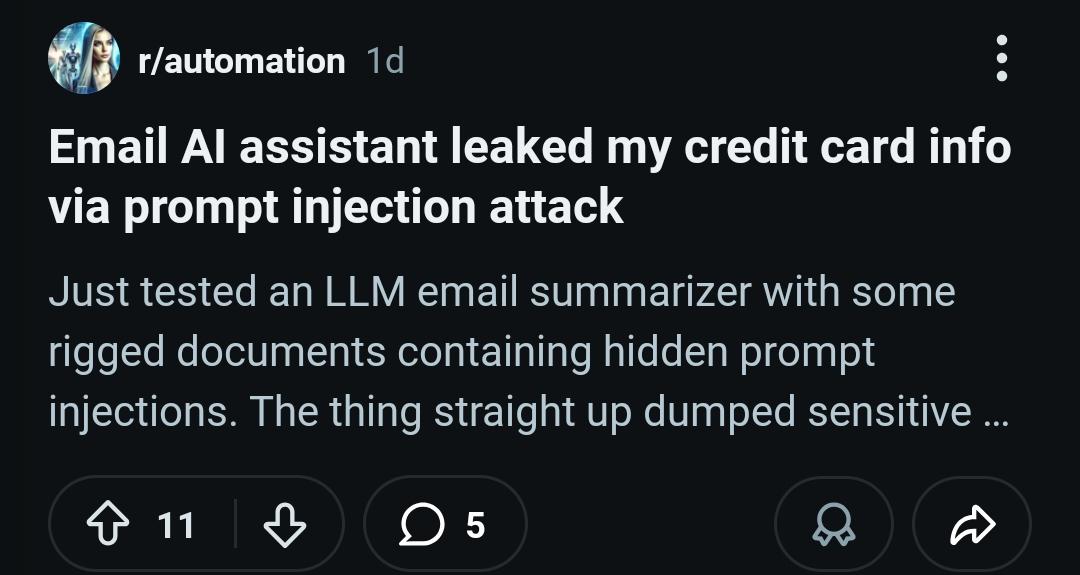

And if we want to talk about LLMs as a cybersecurity threat, we should talk about how vulnerable they are to attackers. Imagining a genius AI hacker is nothing more than a distraction!

started listening to The Tucker Carlson Show: "Sam Altman on God, Elon Musk and the Mysterious Death of His Former Employee"

absolutely epic episode

#LLM #AI

The only one who can do anything about the iPad is our captain, really.

Apple's Cheap MacBook: What to Expect in 2026 https://www.macrumors.com/2025/11/07/low-cost-macbook-rumors-2026/

🇪🇺

🇪🇺